Hello everyone.

I have just finished installing my BlueMind server (with SpamAssassin, OpenDKIM, Amavis, etc.) and migrating my data from Zimbra. All my old mail is there, I can send and receive mail without any problem, and the BlueMind connector for Thunderbird works. I even configured the Nextcloud add-on for the BlueMind server, and everything is OK.

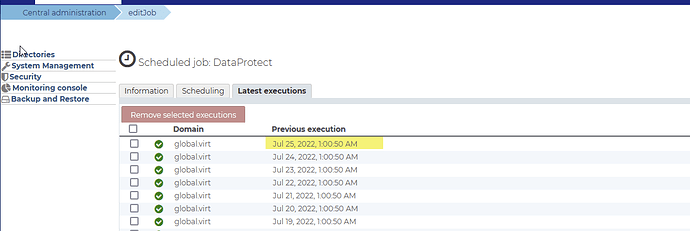

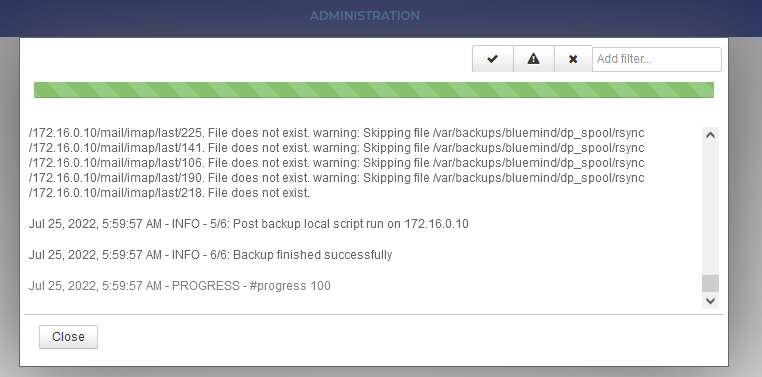

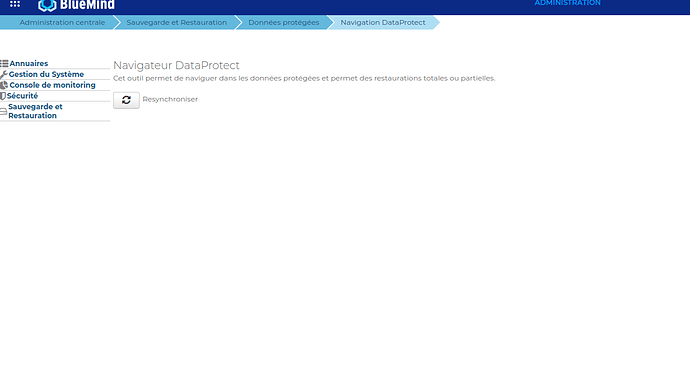

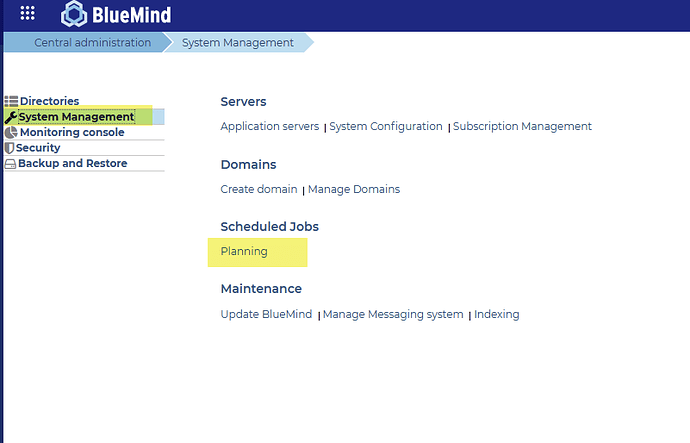

Where it all goes wrong is the backups. Apparently they are not being made:

root@hal-bluemind:~# du -sh /var/backups/bluemind/

6,3M /var/backups/bluemind/

I don't really know where to start looking to get this working.

The /var/backups/bluemind directory is mounted from an NFS share on another machine.
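Since the backup target is an NFS mount, one quick check is whether the share is actually mounted and whether files can be created in it. The following is only a minimal sketch: `check_backup_dir` is a hypothetical helper name (not a BlueMind tool), and it assumes `mountpoint` from util-linux is installed.

```shell
#!/bin/sh
# check_backup_dir is a hypothetical helper name, not a BlueMind tool.
# It prints two lines: the mount state of the directory, then whether the
# current user can create a file in it.
check_backup_dir() {
    dir="$1"

    # mountpoint(1) from util-linux exits 0 only if dir is a mount point.
    if mountpoint -q "$dir" 2>/dev/null; then
        echo "mounted"
    else
        echo "not-mounted"
    fi

    # Create and remove a scratch file to test write access.
    probe="$dir/.write-probe.$$"
    if touch "$probe" 2>/dev/null; then
        rm -f "$probe"
        echo "writable"
    else
        echo "not-writable"
    fi
}
```

It would be called as `check_backup_dir /var/backups/bluemind`, run as the user that performs the backup (assumed here to be root). If it prints `not-mounted`, the 6,3M reported by `du` may be sitting on the local disk under the mount point. If it prints `not-writable`, the NFS export options on the other machine (for example `root_squash`) could be worth a look.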

Could someone help me?

I wanted to attach my anonymized core.log file, but only images can be uploaded, and I would rather not paste a file of several tens of thousands of lines here…

So here are a few excerpts containing FAILURE/ERROR:

2022-07-24 01:00:01,889 [pool-9-thread-2] n.b.s.s.i.Scheduler ERROR - finished with FAILURE status called from here

java.lang.Throwable: sched.finish(FAILURE)

at net.bluemind.scheduledjob.scheduler.impl.Scheduler.finish(Scheduler.java:152)

at net.bluemind.dataprotect.job.DataProtectJob.tick(DataProtectJob.java:125)

at net.bluemind.scheduledjob.scheduler.impl.JobTicker.run(JobTicker.java:71)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

2022-07-24 01:00:01,898 [pool-8-thread-3] n.b.s.s.i.Scheduler INFO - [RunIdImpl [domainUid=global.virt, jid=DataProtect, startTime=1658624401268, endTime=1658624401895, groupId=3f331ada-9fc9-48f4-8f7f-7b1e4fc26deb, status=FAILURE]] finished and recorded: FAILURE, duration: 627ms.

2022-07-25 01:02:49,560 [pool-9-thread-4] n.b.s.s.i.Scheduler ERROR - finished with FAILURE status called from here

java.lang.Throwable: sched.finish(FAILURE)

at net.bluemind.scheduledjob.scheduler.impl.Scheduler.finish(Scheduler.java:152)

at net.bluemind.dataprotect.job.DataProtectJob.tick(DataProtectJob.java:125)

at net.bluemind.scheduledjob.scheduler.impl.JobTicker.run(JobTicker.java:71)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

2022-07-25 01:02:49,567 [pool-8-thread-1] n.b.s.s.i.Scheduler INFO - [RunIdImpl [domainUid=global.virt, jid=DataProtect, startTime=1658710857895, endTime=1658710969561, groupId=cfeaf9c8-a0b3-4854-aeb0-b855f026df4d, status=FAILURE]] finished and recorded: FAILURE, duration: 111666ms.

2022-07-24 07:14:16,901 [BM-Core-27] n.b.u.s.i.TokenAuthProvider ERROR - Fail to validate token for admin0 from [127.0.1.1, IP-freebox, IP-srv-save, 127.0.0.1, IP-srvbluemind]

2022-07-24 07:14:16,952 [BM-Core-27] n.b.a.s.Authentication INFO - login: 'admin0@global.virt', origin: 'bm-hps', from: '[127.0.1.1, IP-freebox, IP-srv-save, 127.0.0.1, IP-srvbluemind]' successfully authentified (status: Ok)

2022-07-24 07:14:18,235 [BM-Core-3] n.b.c.c.s.i.ContainerStoreService WARN - null value for existing item Item{id: 7, uid: admin0_global.virt, dn: admin0 admin0, v: 12} with store net.bluemind.mailbox.persistence.MailboxStore@173bf279

2022-07-24 07:19:18,129 [vert.x-eventloop-thread-4] n.b.c.r.s.v.RestSockJSProxyServer ERROR - error in sock io.vertx.ext.web.handler.sockjs.impl.SockJSSession@e47b5e7: {}

io.vertx.core.http.HttpClosedException: Connection was closed

2022-07-24 07:21:31,625 [core-heartbeat-timer] n.b.s.s.StateContext INFO - Core state heartbeat : core.state.running

2022-07-24 07:21:33,639 [hz.bm-core-b455fcc9-df3a-434f-aff9-86d02876a16e.async.thread-6] n.b.m.c.s.ProductChecksService ERROR - [Autodiscover@bm-mapi] Status CRIT (null)

2022-07-24 07:21:34,199 [hz.bm-core-b455fcc9-df3a-434f-aff9-86d02876a16e.IO.thread-in-2] c.h.n.t.TcpIpConnection INFO - [IP-srvbluemind]:5701 [bluemind-72D26E8A-5BB1-48A4-BC71-EEE92E0CE4EE] [3.12.12] Connection[id=10, /IP-srvbluemind:5701->/IP-srvbluemind:35385, qualifier=null, endpoint=[IP-srvbluemind]:35385, alive=false, type=JAVA_CLIENT] closed. Reason: Connection closed by the other side

2022-07-24 07:21:34,204 [hz.bm-core-b455fcc9-df3a-434f-aff9-86d02876a16e.event-1] c.h.c.i.ClientEndpointManager INFO - [IP-srvbluemind]:5701 [bluemind-72D26E8A-5BB1-48A4-BC71-EEE92E0CE4EE] [3.12.12] Destroying ClientEndpoint{connection=Connection[id=10, /IP-srvbluemind:5701->/IP-srvbluemind:35385, qualifier=null, endpoint=[IP-srvbluemind]:35385, alive=false, type=JAVA_CLIENT], principal='ClientPrincipal{uuid='e28324d7-78e1-4f22-ba39-7730dc88f69a', ownerUuid='510d67c5-9b23-4732-b142-7e52cc9734cd'}, ownerConnection=true, authenticated=true, clientVersion=3.12.12, creationTime=1658647282379, latest statistics=null}

2022-07-24 07:21:35,624 [core-heartbeat-timer] n.b.s.s.StateContext INFO - Core state heartbeat : core.state.running

2022-07-24 07:26:23,271 [hz.bm-core-b455fcc9-df3a-434f-aff9-86d02876a16e.partition-operation.thread-2] c.h.r.i.o.ReadManyOperation ERROR - [IP-srvbluemind]:5701 [bluemind-72D26E8A-5BB1-48A4-BC71-EEE92E0CE4EE] [3.12.12] sequence:2 is too large. The current tailSequence is:-1

java.lang.IllegalArgumentException: sequence:2 is too large. The current tailSequence is:-1

at com.hazelcast.ringbuffer.impl.RingbufferContainer.checkBlockableReadSequence(RingbufferContainer.java:454)

at com.hazelcast.ringbuffer.impl.operations.ReadManyOperation.beforeRun(ReadManyOperation.java:58)

at com.hazelcast.spi.impl.operationservice.impl.OperationRunnerImpl.run(OperationRunnerImpl.java:197)

at com.hazelcast.spi.impl.operationexecutor.impl.OperationThread.process(OperationThread.java:147)

at com.hazelcast.spi.impl.operationexecutor.impl.OperationThread.process(OperationThread.java:125)

at com.hazelcast.spi.impl.operationexecutor.impl.OperationThread.run(OperationThread.java:110)

2022-07-24 07:26:23,291 [hz.bm-core-b455fcc9-df3a-434f-aff9-86d02876a16e.event-2] c.h.c.i.ClientEndpointManager INFO - [IP-srvbluemind]:5701 [bluemind-72D26E8A-5BB1-48A4-BC71-EEE92E0CE4EE] [3.12.12] Destroying ClientEndpoint{connection=Connection[id=2, /IP-srvbluemind:5701->/IP-srvbluemind:36279, qualifier=null, endpoint=[IP-srvbluemind]:36279, alive=false, type=JAVA_CLIENT], principal='ClientPrincipal{uuid='ea8f078a-3793-4912-949f-982e7080f118', ownerUuid='510d67c5-9b23-4732-b142-7e52cc9734cd'}, ownerConnection=true, authenticated=true, clientVersion=3.12.12, creationTime=1658574751873, latest statistics=null}

2022-07-24 07:26:23,433 [hz.bm-core-b455fcc9-df3a-434f-aff9-86d02876a16e.partition-operation.thread-6] c.h.r.i.o.ReadManyOperation ERROR - [IP-srvbluemind]:5701 [bluemind-72D26E8A-5BB1-48A4-BC71-EEE92E0CE4EE] [3.12.12] sequence:11 is too large. The current tailSequence is:-1

java.lang.IllegalArgumentException: sequence:11 is too large. The current tailSequence is:-1

at com.hazelcast.ringbuffer.impl.RingbufferContainer.checkBlockableReadSequence(RingbufferContainer.java:454)

at com.hazelcast.ringbuffer.impl.operations.ReadManyOperation.beforeRun(ReadManyOperation.java:58)

at com.hazelcast.spi.impl.operationservice.impl.OperationRunnerImpl.run(OperationRunnerImpl.java:197)

at com.hazelcast.spi.impl.operationexecutor.impl.OperationExecutorImpl.run(OperationExecutorImpl.java:408)

at com.hazelcast.spi.impl.operationexecutor.impl.OperationExecutorImpl.runOrExecute(OperationExecutorImpl.java:435)

at com.hazelcast.spi.impl.operationservice.impl.Invocation.doInvokeLocal(Invocation.java:648)

at com.hazelcast.spi.impl.operationservice.impl.Invocation.doInvoke(Invocation.java:633)

at com.hazelcast.spi.impl.operationservice.impl.Invocation.invoke0(Invocation.java:592)

at com.hazelcast.spi.impl.operationservice.impl.Invocation.invoke(Invocation.java:256)

at com.hazelcast.spi.impl.operationservice.impl.InvocationBuilderImpl.invoke(InvocationBuilderImpl.java:61)

at com.hazelcast.client.impl.protocol.task.AbstractPartitionMessageTask.processMessage(AbstractPartitionMessageTask.java:67)

at com.hazelcast.client.impl.protocol.task.AbstractMessageTask.initializeAndProcessMessage(AbstractMessageTask.java:137)

at com.hazelcast.client.impl.protocol.task.AbstractMessageTask.run(AbstractMessageTask.java:117)

at com.hazelcast.spi.impl.operationservice.impl.OperationRunnerImpl.run(OperationRunnerImpl.java:163)

at com.hazelcast.spi.impl.operationexecutor.impl.OperationThread.process(OperationThread.java:159)

at com.hazelcast.spi.impl.operationexecutor.impl.OperationThread.process(OperationThread.java:127)

at com.hazelcast.spi.impl.operationexecutor.impl.OperationThread.run(OperationThread.java:110)

2022-07-24 07:26:23,624 [core-heartbeat-timer] n.b.s.s.StateContext INFO - Core state heartbeat : core.state.running

2022-07-24 07:26:23,694 [hz.bm-core-b455fcc9-df3a-434f-aff9-86d02876a16e.IO.thread-in-1] c.h.n.t.TcpIpConnection WARN - [IP-srvbluemind]:5701 [bluemind-72D26E8A-5BB1-48A4-BC71-EEE92E0CE4EE] [3.12.12] Connection[id=4, /IP-srvbluemind:5701->/IP-srvbluemind:44983, qualifier=null, endpoint=[IP-srvbluemind]:44983, alive=false, type=JAVA_CLIENT] closed. Reason: Exception in Connection[id=4, /IP-srvbluemind:5701->/IP-srvbluemind:44983, qualifier=null, endpoint=[IP-srvbluemind]:44983, alive=true, type=JAVA_CLIENT], thread=hz.bm-core-b455fcc9-df3a-434f-aff9-86d02876a16e.IO.thread-in-1

java.io.IOException: Connection reset by peer

at sun.nio.ch.FileDispatcherImpl.read0(Native Method)

at sun.nio.ch.SocketDispatcher.read(SocketDispatcher.java:39)

at sun.nio.ch.IOUtil.readIntoNativeBuffer(IOUtil.java:223)

at sun.nio.ch.IOUtil.read(IOUtil.java:197)

at sun.nio.ch.SocketChannelImpl.read(SocketChannelImpl.java:379)

at com.hazelcast.internal.networking.nio.NioInboundPipeline.process(NioInboundPipeline.java:113)

at com.hazelcast.internal.networking.nio.NioThread.processSelectionKey(NioThread.java:369)

at com.hazelcast.internal.networking.nio.NioThread.processSelectionKeys(NioThread.java:354)

at com.hazelcast.internal.networking.nio.NioThread.selectLoop(NioThread.java:280)

at com.hazelcast.internal.networking.nio.NioThread.run(NioThread.java:235)

2022-07-24 07:26:23,695 [hz.bm-core-b455fcc9-df3a-434f-aff9-86d02876a16e.event-3] c.h.c.i.ClientEndpointManager INFO - [IP-srvbluemind]:5701 [bluemind-72D26E8A-5BB1-48A4-BC71-EEE92E0CE4EE] [3.12.12] Destroying ClientEndpoint{connection=Connection[id=4, /IP-srvbluemind:5701->/IP-srvbluemind:44983, qualifier=null, endpoint=[IP-srvbluemind]:44983, alive=false, type=JAVA_CLIENT], principal='ClientPrincipal{uuid='0fe70a0c-9e2f-44fe-ba60-d8e31f468d91', ownerUuid='510d67c5-9b23-4732-b142-7e52cc9734cd'}, ownerConnection=true, authenticated=true, clientVersion=3.12.12, creationTime=1658574756254, latest statistics=null}

2022-07-24 07:26:24,179 [hz.bm-core-b455fcc9-df3a-434f-aff9-86d02876a16e.IO.thread-in-1] c.h.n.t.TcpIpConnection INFO - [IP-srvbluemind]:5701 [bluemind-72D26E8A-5BB1-48A4-BC71-EEE92E0CE4EE] [3.12.12] Connection[id=3, /IP-srvbluemind:5701->/IP-srvbluemind:41823, qualifier=null, endpoint=[IP-srvbluemind]:41823, alive=false, type=JAVA_CLIENT] closed. Reason: Connection closed by the other side

2022-07-24 07:26:24,180 [hz.bm-core-b455fcc9-df3a-434f-aff9-86d02876a16e.event-3] c.h.c.i.ClientEndpointManager INFO - [IP-srvbluemind]:5701 [bluemind-72D26E8A-5BB1-48A4-BC71-EEE92E0CE4EE] [3.12.12] Destroying ClientEndpoint{connection=Connection[id=3, /IP-srvbluemind:5701->/IP-srvbluemind:41823, qualifier=null, endpoint=[IP-srvbluemind]:41823, alive=false, type=JAVA_CLIENT], principal='ClientPrincipal{uuid='2222756a-94ac-4e39-b968-ceff7b9fa027', ownerUuid='510d67c5-9b23-4732-b142-7e52cc9734cd'}, ownerConnection=true, authenticated=true, clientVersion=3.12.12, creationTime=1658574756129, latest statistics=null}

2022-07-24 07:26:24,203 [hz.ShutdownThread] c.h.i.Node INFO - [IP-srvbluemind]:5701 [bluemind-72D26E8A-5BB1-48A4-BC71-EEE92E0CE4EE] [3.12.12] Running shutdown hook... Current state: ACTIVE

2022-07-24 07:26:24,204 [Thread-7] n.b.a.l.ApplicationLauncher INFO - Stopping BlueMind Core...

2022-07-24 07:26:24,204 [Thread-7] n.b.s.s.StateContext INFO - Core state transition from core.state.running to core.stopped

2022-07-24 07:26:24,205 [hz.ShutdownThread] c.h.c.LifecycleService INFO - [IP-srvbluemind]:5701 [bluemind-72D26E8A-5BB1-48A4-BC71-EEE92E0CE4EE] [3.12.12] [IP-srvbluemind]:5701 is SHUTTING_DOWN

2022-07-24 07:26:24,206 [hz.ShutdownThread] n.b.h.c.i.ClusterMember INFO - HZ cluster switched to state SHUTTING_DOWN, running: true

2022-07-24 07:26:24,210 [hz.ShutdownThread] c.h.i.Node WARN - [IP-srvbluemind]:5701 [bluemind-72D26E8A-5BB1-48A4-BC71-EEE92E0CE4EE] [3.12.12] Terminating forcefully...

2022-07-24 07:26:24,210 [vert.x-worker-thread-18] n.b.s.s.i.StateObserverVerticle INFO - New core state is CORE_STATE_STOPPING, cause: BUS_EVENT

2022-07-24 07:26:24,213 [hz.ShutdownThread] c.h.i.Node INFO - [IP-srvbluemind]:5701 [bluemind-72D26E8A-5BB1-48A4-BC71-EEE92E0CE4EE] [3.12.12] Shutting down connection manager...

2022-07-24 07:26:24,225 [Thread-7] n.b.a.l.ApplicationLauncher INFO - BlueMind Core stopped.

2022-07-24 07:26:24,232 [hz.ShutdownThread] c.h.i.Node INFO - [IP-srvbluemind]:5701 [bluemind-72D26E8A-5BB1-48A4-BC71-EEE92E0CE4EE] [3.12.12] Shutting down node engine...

2022-07-24 07:26:24,322 [hz.ShutdownThread] c.h.i.NodeExtension INFO - [IP-srvbluemind]:5701 [bluemind-72D26E8A-5BB1-48A4-BC71-EEE92E0CE4EE] [3.12.12] Destroying node NodeExtension.

2022-07-24 07:26:24,322 [hz.ShutdownThread] c.h.i.Node INFO - [IP-srvbluemind]:5701 [bluemind-72D26E8A-5BB1-48A4-BC71-EEE92E0CE4EE] [3.12.12] Hazelcast Shutdown is completed in 113 ms.

2022-07-24 07:26:24,327 [hz.ShutdownThread] c.h.c.LifecycleService INFO - [IP-srvbluemind]:5701 [bluemind-72D26E8A-5BB1-48A4-BC71-EEE92E0CE4EE] [3.12.12] [IP-srvbluemind]:5701 is SHUTDOWN

2022-07-24 07:26:24,327 [hz.ShutdownThread] n.b.h.c.i.ClusterMember INFO - HZ cluster switched to state SHUTDOWN, running: false

2022-07-24 07:27:00,472 [Start Level: Equinox Container: 9338cf65-1bc6-4946-986f-a70679b58c76] OSGI INFO - OSGI Log activated

2022-07-24 07:27:00,481 [Start Level: Equinox Container: 9338cf65-1bc6-4946-986f-a70679b58c76] n.b.s.s.SentrySettingsActivator INFO - Sentry settings activator launched

2022-07-24 07:27:00,523 [main] n.b.a.l.ApplicationLauncher INFO - Starting BlueMind Application...

2022-07-24 07:27:00,542 [main] n.b.h.c.MQ WARN - HZ native client is not possible in this JVM, client fragment missing (com.hazelcast.client.config.ClientConfig cannot be found by net.bluemind.hornetq.client_4.1.62053)

2022-07-24 07:27:00,545 [main] n.b.h.c.MQ INFO - HZ cluster member implementation was chosen for bm-core.

2022-07-24 07:27:00,554 [main] n.b.h.c.i.ClusterMember INFO - ************* HZ CONNECT *************

2022-07-24 07:27:00,567 [main] n.b.h.c.i.ClusterMember INFO - HZ setup for net.bluemind.application.launcher.ApplicationLauncher$$Lambda$51/19717364@1fc2b765....

2022-07-24 07:27:00,570 [main] n.b.a.l.ApplicationLauncher INFO - BlueMind Application started

2022-07-24 07:27:00,779 [bm-hz-connect] c.h.i.AddressPicker INFO - [LOCAL] [bluemind-72D26E8A-5BB1-48A4-BC71-EEE92E0CE4EE] [3.12.12] Interfaces is disabled, trying to pick one address from TCP-IP config addresses: [IP-srvbluemind]

2022-07-24 07:27:00,779 [bm-hz-connect] c.h.i.AddressPicker INFO - [LOCAL] [bluemind-72D26E8A-5BB1-48A4-BC71-EEE92E0CE4EE] [3.12.12] Prefer IPv4 stack is true, prefer IPv6 addresses is false

2022-07-24 07:27:00,796 [bm-hz-connect] c.h.i.AddressPicker INFO - [LOCAL] [bluemind-72D26E8A-5BB1-48A4-BC71-EEE92E0CE4EE] [3.12.12] Picked [IP-srvbluemind]:5701, using socket ServerSocket[addr=/IP-srvbluemind,localport=5701], bind any local is false
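Excerpts like the ones above can be pulled out of a large log without pasting the whole file. This is only a minimal sketch: `extract_failures` is a hypothetical helper name, and the log path used in the usage note is an assumption about where core.log lives on this install.

```shell
#!/bin/sh
# extract_failures is a hypothetical helper name.
# Print every line of the given log file that contains ERROR or FAILURE,
# prefixed with its line number in the file.
extract_failures() {
    grep -n -E 'ERROR|FAILURE' "$1"
}
```

For example, `extract_failures /var/log/bm/core.log` (path assumed). Stack-trace lines that follow an ERROR entry do not contain either keyword, so they are not printed; adding grep's `-A` option with a line count would include some trailing context.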

If you can help me or point me to where to look, that would be great.

Thanks in advance for your replies.

Have a nice day.